Introduction

OpenAI wrapped up its 12-day event by introducing O3, its latest AI model, alongside a cost-efficient sibling, o3 mini.

One might ask: why not O2 after O1? It was not a random move. The designation “o3” was chosen to avoid a trademark conflict with O2, the existing UK mobile carrier. The model is available in two versions: o3 and o3-mini.

Currently, O3 is available by registration only, to scientists and researchers for “Safety Testing”. OpenAI has released a statement inviting researchers to sign up for the waitlist to access the earliest versions of O3 for testing.

What is OpenAI’s O3?

Building upon the foundation laid by the O1 model, O3 introduces enhanced features designed to tackle complex tasks such as coding and scientific analysis.

A notable innovation in O3 is the implementation of deliberative alignment, a safety mechanism designed to go beyond traditional methods like Reinforcement Learning from Human Feedback (RLHF) by incorporating more comprehensive evaluation techniques.

The O3-mini variant offers a cost-effective solution without compromising performance, making it suitable for a range of applications. OpenAI has taken a proactive approach to safety by granting researchers early access to these models for safety evaluations prior to their public release.

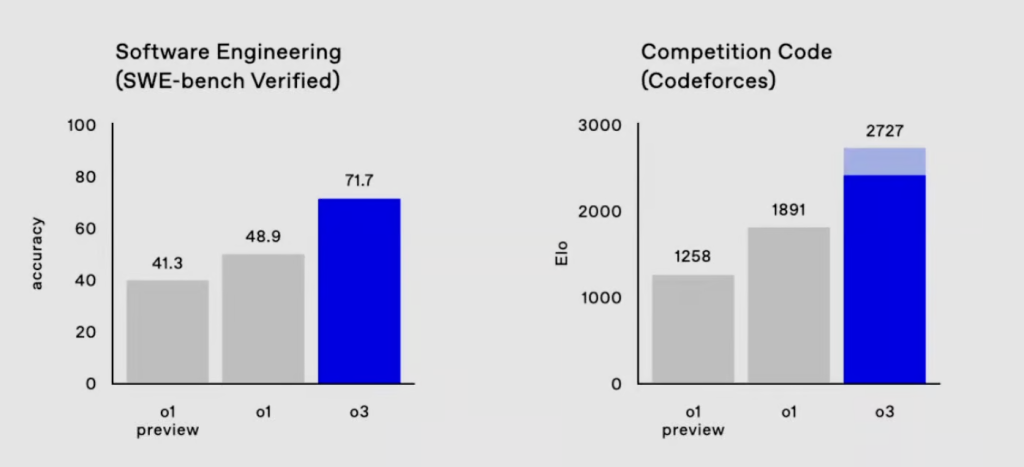

O3 demonstrates improved performance over the o1 model in complex tasks, including coding, mathematics and science. The accompanying image released by OpenAI shows how the O3 model compares to its previous versions.

As noted above, the O3 model is not yet available for general use. It has been announced for safety testing by researchers, and applications remain open on OpenAI’s website.

O3 vs. O1: How do they perform?

The O3 model builds on the foundation laid by the O1 model, offering several advancements in reasoning capabilities, safety mechanisms, and overall functionality. Its primary focus is on the following areas:

Mathematics and Science

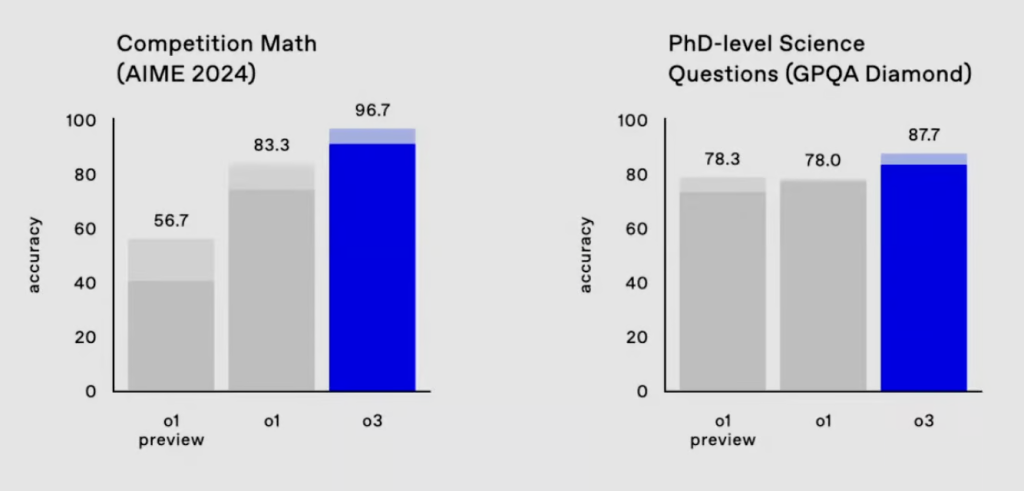

Thanks to its advances in reasoning capabilities and an improved training process, O3 performs better than its predecessor, O1. The benchmark results released by OpenAI compare the two models.

The metrics are similar for science-related benchmarks. On GPQA Diamond, which measures performance on PhD-level science questions, o3 achieved an accuracy of 87.7%, up from o1’s 78%.
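To put the GPQA Diamond figures in perspective, the short sketch below works out the gain in percentage points and the relative reduction in error rate. The helper name `compare_accuracy` is hypothetical and introduced only for illustration; the two accuracy values are the ones quoted above.

```python
def compare_accuracy(old_pct: float, new_pct: float) -> dict:
    """Return the absolute gain (percentage points) and relative error reduction (%)."""
    old_err = 100.0 - old_pct  # share of questions the older model got wrong
    new_err = 100.0 - new_pct  # share of questions the newer model got wrong
    return {
        "gain_points": round(new_pct - old_pct, 1),
        "error_reduction_pct": round((old_err - new_err) / old_err * 100.0, 1),
    }


# GPQA Diamond accuracies quoted in the article: o1 at 78%, o3 at 87.7%.
gpqa = compare_accuracy(old_pct=78.0, new_pct=87.7)
print(gpqa)
```

Read this way, a gain of under ten percentage points still corresponds to the model missing a little under half as many questions as before.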

We can expand on the released results as follows:

Advanced Mathematical Reasoning

- Complex Problem Solving: The O3 model is designed to handle intricate mathematical computations and reasoning tasks, enabling it to solve complex problems with higher accuracy.

- Improved Understanding of Mathematical Concepts: O3 can better grasp abstract mathematical concepts, such as proofs, calculus, and linear algebra, making it a valuable tool for researchers and educators.

- Precision in Calculation: The model makes fewer errors in step-by-step computations, which is critical in domains where precision is key, such as engineering or financial modeling.

Enhanced Scientific Capabilities

- Scientific Analysis: O3 can interpret and analyze scientific data more effectively, thanks to its advanced reasoning abilities. This includes understanding scientific papers, performing data-driven analysis, and generating hypotheses.

- Handling Complex Systems: The model can simulate and predict outcomes in scientific experiments and systems, which is useful in fields like physics, biology, and chemistry.

- Coding for Science: O3 is proficient in generating code for scientific computing tasks, such as simulations, data visualizations, and algorithm implementations, helping scientists save time and resources.

Coding

O3 demonstrates significant improvements in various aspects of programming capabilities, particularly in handling complex tasks, understanding advanced coding concepts, and generating optimized solutions.

| Feature | O1 Model | O3 Model |
|---|---|---|
| Code Quality | Basic and functional code generation. | Optimized, modular, and scalable code. |
| Debugging | Limited debugging, required user intervention. | Accurate, step-by-step fixes with explanations. |
| Advanced Use Cases | Struggled with specialized tasks like AI or APIs. | Excels in handling complex applications and workflows. |
| Multi-Language Support | Basic support for popular languages. | Proficient in multiple languages and frameworks. |
| Code Explanation | Limited and basic-level explanations. | Detailed, insightful, and context-aware explanations. |
| Testing and Docs | Minimal test case generation and documentation. | Automated testing and comprehensive documentation. |
| Cloud and DevOps | Basic support for cloud tools and workflows. | Strong in cloud (AWS, GCP) and DevOps (Terraform, Kubernetes). |

EpochAI Frontier Math

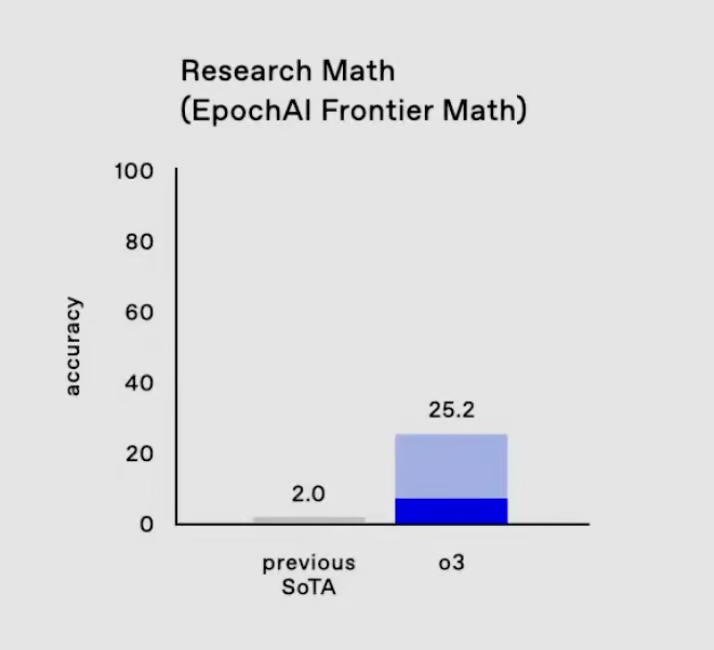

One area where o3’s progress is especially noteworthy is on the EpochAI Frontier Math benchmark.

Epoch AI’s Frontier Math is important because it pushes models beyond rote memorization or optimization of familiar patterns. Instead, it tests their ability to generalize, reason abstractly, and tackle problems they haven’t encountered before, all traits essential for advancing AI reasoning capabilities. o3’s score of 25.2% on this benchmark looks like a significant leap forward.

O3 on the ARC-AGI benchmark

On the ARC-AGI benchmark, which evaluates an AI’s ability to handle new, challenging mathematical and logical problems, o3 attains three times the accuracy of its predecessor.

As reported by New Scientist, O3 also scored a record high of 75.7% on the Abstraction and Reasoning Corpus (ARC), a prestigious AI reasoning test developed by Google software engineer François Chollet, but it did not meet the requirements for the “Grand Prize”, which calls for 85% accuracy. Without the computing cost limits imposed by the test, the model achieves a new record high of 87.5%, while humans score 84% on average.
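The distinction between the two ARC scores is easy to miss, so here is a small sketch that compares them against the 85% Grand Prize bar and the 84% human average quoted above. The run labels and the `within_compute_limit` flag are illustrative assumptions; only the four percentages come from the reporting.

```python
GRAND_PRIZE_THRESHOLD = 85.0  # accuracy required for the Grand Prize
HUMAN_AVERAGE = 84.0          # average human score quoted in the article

# Hypothetical labels for the two reported o3 runs.
runs = [
    {"name": "o3, within compute limit", "score": 75.7, "within_compute_limit": True},
    {"name": "o3, compute limit lifted", "score": 87.5, "within_compute_limit": False},
]


def summarize(run: dict) -> dict:
    """Check a run against the threshold and the human average."""
    clears_bar = run["score"] >= GRAND_PRIZE_THRESHOLD
    return {
        "name": run["name"],
        "clears_bar": clears_bar,
        # A run only counts if it clears the bar while respecting the compute limit.
        "prize_eligible": clears_bar and run["within_compute_limit"],
        "vs_human_points": round(run["score"] - HUMAN_AVERAGE, 1),
    }


summaries = [summarize(run) for run in runs]
for row in summaries:
    print(row)
```

Neither run is prize-eligible under this reading: the first stays within the compute limit but falls short of 85%, while the second exceeds 85% only once the limit is lifted.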

What makes ARC AGI particularly difficult is that every task requires distinct reasoning skills. Models cannot rely on memorized solutions or templates; instead, they must adapt to entirely new challenges in each test.

According to TechCrunch, reinforcement learning was used to teach o3 to “think” before reacting using what OpenAI refers to as a “private chain of thought.” The model can allegedly plan ahead and reason through a task, carrying out a sequence of actions over a long period of time to assist in solving the problem.

OpenAI’s O3 Mini

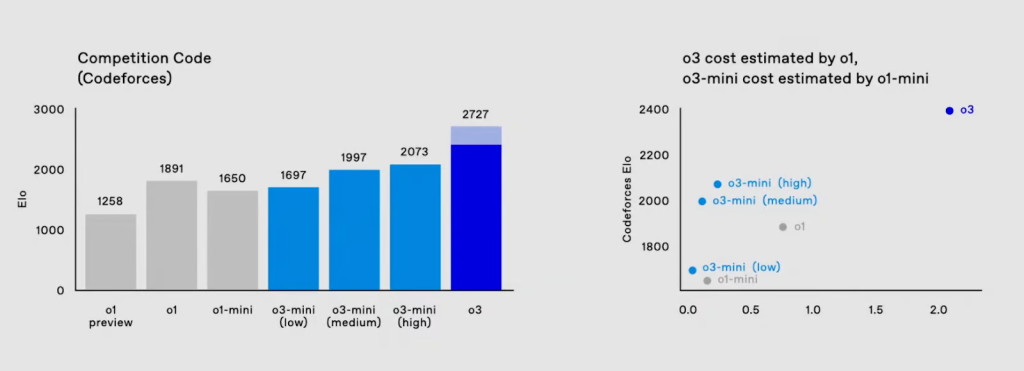

O3 mini was introduced alongside O3 as a cost-efficient alternative designed to bring advanced reasoning capabilities to more users while maintaining performance.

Until January 10, 2025, access is provided for safety and security researchers through an invitation-based testing program. OpenAI plans to release o3-mini to the public in January 2025.

OpenAI described the model as redefining the “cost-performance frontier” in reasoning models, making advanced reasoning accessible for tasks that demand high accuracy but must balance resource constraints.

A standout feature of O3 Mini is its adaptive thinking time, enabling users to customize the model’s reasoning effort based on task complexity. For simpler tasks, users can opt for low-effort reasoning to enhance speed and efficiency.

The live demo showcased how o3 mini delivers on its promise. The benchmarks released by OpenAI showcase how the model performs.

For more complex tasks, higher reasoning effort options allow the model to achieve performance comparable to O3 itself, but at a significantly lower cost. This adaptability is especially valuable for developers and researchers working across a wide range of use cases.
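As a rough illustration of how adaptive thinking time might look from a developer's side, the sketch below maps a task's complexity to a reasoning effort level and builds a request payload. Everything here is an assumption made for illustration: the 1 to 10 complexity score, the `pick_effort` and `build_request` helpers, the "medium" level, and the payload field names are hypothetical and not taken from OpenAI's announcement, so the official API documentation should be checked once the model is released.

```python
# Assumed effort levels: the article mentions low-effort and higher-effort
# options; "medium" is added here purely for illustration.
EFFORT_LEVELS = ("low", "medium", "high")


def pick_effort(complexity: int) -> str:
    """Map a hypothetical 1-10 task complexity score to an effort level."""
    if not 1 <= complexity <= 10:
        raise ValueError("complexity must be between 1 and 10")
    if complexity <= 3:
        return "low"     # simple tasks: favour speed and cost
    if complexity <= 7:
        return "medium"
    return "high"        # hard tasks: spend more reasoning effort


def build_request(prompt: str, complexity: int) -> dict:
    """Build an illustrative request payload; the field names are assumptions."""
    return {
        "model": "o3-mini",
        "reasoning_effort": pick_effort(complexity),
        "messages": [{"role": "user", "content": prompt}],
    }


simple = build_request("Convert 72 degrees Fahrenheit to Celsius.", complexity=2)
hard = build_request("Prove that the square root of 2 is irrational.", complexity=9)
print(simple["reasoning_effort"], hard["reasoning_effort"])
```

The point of the sketch is the trade-off itself: the caller decides per request how much reasoning to pay for, rather than switching between separate models.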

OpenAI’s O3 and O3 mini: The release date

From the official announcement, we can gather that O3 mini will be released by the end of January 2025. It will be a cost-efficient solution that can be accessed through the official OpenAI website.

As for O3, the main model, there is no specific release date yet, but we can expect it to be released shortly after the O3 mini model.

Currently, the O3 mini model is available to researchers on an “invitation testing” basis only. The model is being rolled out for researchers to test and give feedback on its performance and safety measures. The application forms are currently on the official OpenAI website (dated 20th December 2024).

Conclusion

OpenAI continues to advance with each release. We have already covered InstructGPT and SearchGPT, which gave OpenAI’s products a strong footing in the market.

Similarly, O3 is now almost at our fingertips. These models can be expected to deliver faster performance than ever, pushing the tech landscape forward.

What is new is the cautious rollout that OpenAI is undertaking. This raises the question of ethical responsibility, one of the many themes surrounding AI agents in 2025. Hopefully, this release will be another exciting one to watch.